Recently I have to deal with data pipeline with large volumn of data. I was using python script first to make plenty of sessions query for each row of data because of cascading query between different tables. This caused bottleneck for only 400,000 rows of data which required almost 2 hrs of processing time. I have used different approches like batch querying the dimension table or even async programming to separate the compute and insert operation. But both turns out to be a not quite well performance. So I decided to turn to spark.

What you will learn from this blog?

- I will take a note on how to use

aws EMRfor quick set up cluster for computing purpose. - some basic computing skill with

sparkin scala.

What is EMR?

EMR stands for Elastic Mapreduce.

How ?

You will have 3 options for submitting your jobs on the cluster.

- via the web-ui

- using the awscli

- restful api

What can we do in this platform?

Streaming? ETL? Machine Learning?

Choosing instances

- Master: coordinating different components inside the cluster.

- Core:

- Task:

instance type:

- c4.xxxx: compute optimized

- Batch processing workloads

- Media transcoding

- High-performance web servers

- High-performance computing (HPC)

- Scientific modeling

- Dedicated gaming servers and ad serving engines Machine learning inference and other compute-intensive applications

- d2.xxxx

- i3.xxxx: storage optimized.

- m4.xxxx: general purpose

- r4.xxxx

https://amazonaws-china.com/ec2/instance-types/

Pricing

- Spot instance

- Price will be adjusted due to the current highest price in the current area.(Take about 5 to 10 minutes to set up)

- On demand instance

- Price is static.(Set up immediately)

how to run a script when boostraping?

add a custom jar reside at s3://region.elasticmapreduce/libs/script-runner/script-runner.jar and set the arguments.

Some thoughts that I have during using aws EMR?

-

how does data map from s3 to the instance(aka how does aws handle data stored in s3 to be read from instance)?

-

In the world of python, data should be better arraged in the form of dictionary while in the context of spark, data should be better thought of as

RDDin some sense. -

How does spot fleets work?

-

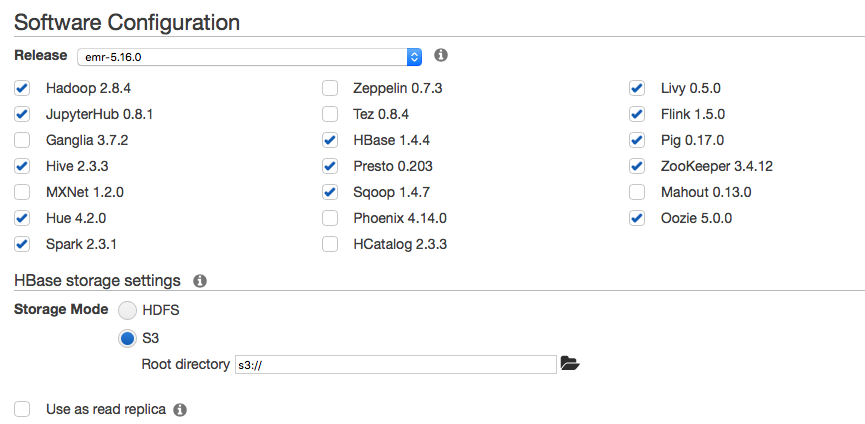

HBase on Amazon

Reference

- http://docs.amazonaws.cn/en_us/emr/latest/ManagementGuide/emr-mgmt.pdf

- https://docs.aws.amazon.com/zh_cn/AWSEC2/latest/UserGuide/spot-fleet.html

- https://docs.amazonaws.cn/emr/latest/ReleaseGuide/emr-release.pdf#emr-hbase-s3

- https://docs.amazonaws.cn/emr/latest/ReleaseGuide/emr-hbase-s3.html#emr-hbase-s3-read-replica